
The rise of generative AI has been touted as the next great transformative technology that will revolutionize education, but how much of that is hype, and how much is, or will be, reality? At the recent Redesigning Pedagogy International Conference (RPIC) 2024, Professor Mutlu Cukurova from University College London offered international insights into AI, delving deeper into these questions and sharing his vision of a future in which humans and AI systems co-exist synergistically to enrich educational experiences. This article is based on his keynote address titled “Beyond the Hype of AI in Education to Visions for the Future” at RPIC 2024.

How do we define Artificial Intelligence (AI) today? Modern AI, including recent advances in generative pre-trained transformers (GPTs), can be conceptualized in three ways: as externalizing, internalizing, or extending human cognition.

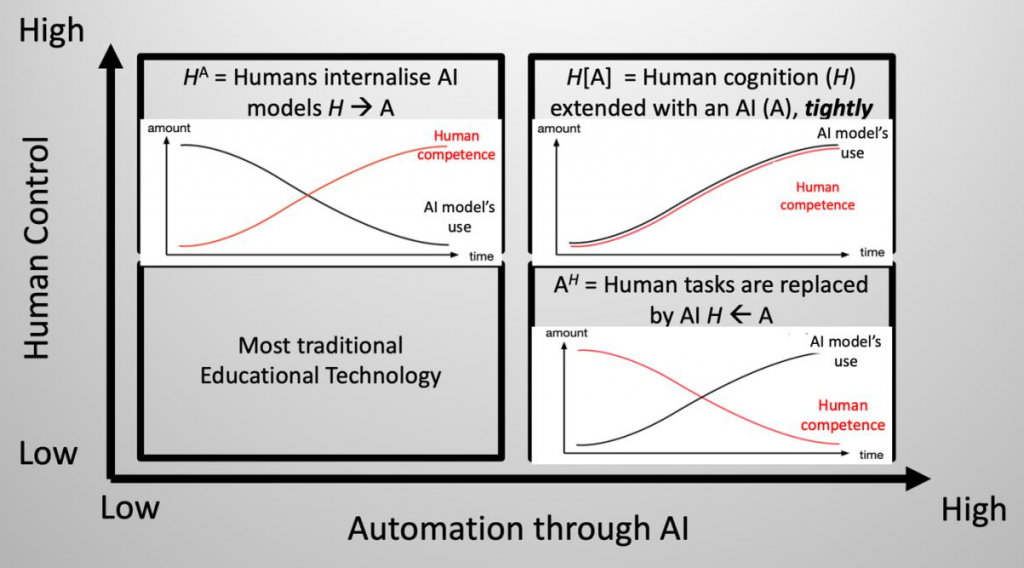

In the externalization of cognition, certain human tasks are defined, modeled and replaced by AI as a tool; most of the AI tools we see today fall into this category. In the second conceptualization, humans internalize AI models and use them to change how they represent their own thinking. In the third conceptualization, AI models extend human cognition as part of tightly coupled human-AI systems, where the emergent intelligence is expected to be more than the sum of each agent’s intelligence.

“But the challenge is even bigger than this – is it always possible to explain or predict all aspects of human learning and competence development?”

– Professor Cukurova asks

According to the second conceptualization, AI can be seen as computational models of learning phenomena that humans can internalize to change their representational thought. The data derived from learning environments can also be used to build machine learning classifiers that predict success in those environments.

Over the last decade, my team at University College London (UCL) has focused on open-ended learning environments, designing analytics and AI solutions that support teachers and learners in such constructivist settings. For instance, we have been investigating students as they solve open-ended design problems, collecting multiple modalities of data to model their collaborative interactions.

The ultimate goal of such prediction models is to intervene directly in teaching and learning practice based on their predictions. However, AI that intervenes directly presents significant challenges. These broadly relate to threats to human agency, the accuracy of predictions in social contexts, and the normativity problem: the difficulty of deciding what is good or bad in a complex social learning situation. They also include well-documented issues of algorithmic bias, lack of transparency, model hallucinations, and accountability for AI tools’ decisions. But the challenge is even bigger than this – is it always possible to explain or predict all aspects of human learning and competence development?

The term AI was coined in the proposal for the 1956 Dartmouth College summer research project, based on the conjecture that every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it. Since then, we have lived under the impression that, if only we could find them, there would be formulas and models to predict all aspects of human learning. However, some aspects of learning may only come through the slow experience of living those learning moments. This is what makes the time spent on them meaningful: in complex social constructivist learning environments, we cannot simply jump ahead to the answer with predictions telling us what the most productive next step would be.

On the other hand, if we take the second conceptualization of AI as computational models for humans to internalize, these models can be seen as opportunities to describe learning processes with more precision, rather than attempting the potentially impossible task of predicting the future. At UCL, we aim to use these models to describe lived learning experiences in detail and with precision, making these experiences more visible to teachers and learners. We use the models to create specific, precise feedback that increases students’ awareness of their lived experiences and keeps them motivated to engage in the future.

Teachers and learners find such feedback extremely valuable for increasing awareness of their own and others’ lived experiences in complex social constructivist learning activities. The accountability that comes with this awareness tends to influence their engagement with learning experiences and may help them regulate their behaviours. Therefore, making lived learning experiences more visible and explicit with computational models holds significant value, independent of any prescriptive advice from predictive models.

If we map the three conceptualizations of AI onto Shneiderman’s co-ordinates of human agency and automation (see Figure 1), most traditional educational technology could perhaps be considered to allow very little human agency and to have very little automation built in.

Figure 1. Cukurova’s (2024) framework on the future of AI in Education based on Shneiderman’s co-ordinates. From: “The Interplay of Learning, Analytics, and Artificial Intelligence in Education: A Vision for Hybrid Intelligence,” by M. Cukurova, 2024, arXiv:2403.16081 [cs.CY] (https://arxiv.org/pdf/2403.16081).

We have yet to see substantial work in the third conceptualization of AI, that is, human cognition extended by AI in tightly coupled human-AI hybrid intelligence systems. This is the remaining corner of the framework, indicating both high automation and high human agency. At best, the current complementary paradigm in AI in education aims to better match what humans and AI can each do to the problems at hand in order to improve productivity. These approaches aim to improve productivity rather than human intelligence per se. More commonly, we hand over our agency to an AI system to complete a task, expecting improved performance and task completion. We must be judicious in selecting the tasks we delegate to AI, as overreliance could lead to the atrophy of critical competencies in the long term.

To achieve human-AI hybrid intelligence systems, we need AI models that interact fluidly with us, understand our interests dynamically, and adapt accordingly. Recent progress with OpenAI’s GPT-4o (“omni”) model is a step in the right direction, yet current AI systems still lack the ability to update their models based on real-time interaction data. Rather than pushing their predictions to us, human-AI hybrid intelligence systems would require interactions in which AI encourages us to reach our own conclusions by providing relevant information for the task at hand. I hope to see more of these systems in our community in the future.

Although there has been significant technical progress in AI in recent years, real-world pedagogical adoption by practitioners and the impact of AI in education depend on many other factors, including technical infrastructure, school governance, pedagogical culture, teacher training, and assessment structures, to name a few. AI solutions in education are not closed engineering systems but part of a larger socio-technical ecosystem. Therefore, based on decades of research in AI in education, I assert that AI tools alone are unlikely to democratize or revolutionize education. Change in education systems is likely to happen gradually, and it is our responsibility as key stakeholders to steer it towards an intentional, evidence-informed and human-centered direction.

“Change in education systems is likely to happen gradually, and it is our responsibility as key stakeholders to steer it towards an intentional, evidence-informed and human-centered direction.”

– Professor Cukurova, on the role key stakeholders play