Contributed by Chan Kuang Wen and Tan Jing Long, from Raffles Institution (Junior College) and Temasek Junior College, respectively, for SingTeach Virtual Staff Lounge

ChatGPT. You may not know exactly what it is, but you must have seen or heard what it can do. For starters, ChatGPT is a machine learning model that interacts in a conversational way (Ramponi, 2022). The dialogue format makes it possible for ChatGPT to answer follow-up questions, challenge incorrect premises and even admit its mistakes. Such a powerful tool has naturally sparked significant concern over plagiarism (Chia, 2023) and disruption to classroom teaching (Lim, 2022). This raises the question: is ChatGPT a threat to education? Or does it spell the future of education?

It is unlikely that ChatGPT or any other AI language model would cause teachers to become jobless. AI can assist teachers in tasks such as grading papers and creating plans, but it is not capable of replacing the human element of teaching, which includes the ability to form connections with students and provide emotional support. Additionally, AI cannot provide the same level of creativity, spontaneity, and adaptability that a human teacher can. Therefore, it is more likely that AI will augment and assist teachers rather than replace them.

Sounds like a disclaimer? The above paragraph is a verbatim response by ChatGPT to the prompt, “I am a teacher. Will I be rendered jobless by you?” What is impressive is not only the nuance and criticality displayed in its response, but also its apparent self-awareness.

Indeed, ChatGPT is a powerful chatbot that can serve as a learning companion for students. For one, it could substitute for search engines. Before ChatGPT, search engines such as Google assisted students by organizing the vast Internet into a directory of the most relevant information. However, students still needed to click through the links and extract whatever information fit their purposes. ChatGPT, by contrast, gives students a fit-for-purpose answer almost immediately. Furthermore, ChatGPT can contextualize each answer in light of the preceding prompts.

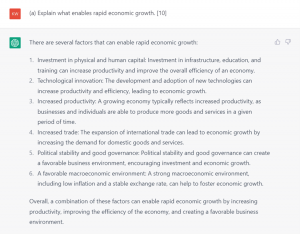

To what extent is ChatGPT a good learning companion to students? We stress tested ChatGPT on two subjects. When asked a quintessential essay question in Economics (Fig. 1), it proposed a robust list of distinct points which students could potentially substantiate.

Figure 1. ChatGPT’s response when it was asked an Economics essay question.

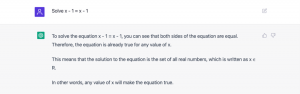

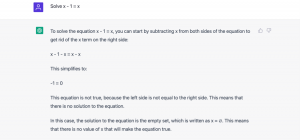

We went a little further for Math and prompted ChatGPT to solve two equations which did not have unique solutions (Fig. 2, Fig. 3). To our amazement, ChatGPT seemingly understood a deeper mathematical concept and was able to make a general statement about the existence of solutions, rather than just solving the equations.

Figure 2. ChatGPT’s response when asked to solve a Math problem with infinite solutions.

Figure 3. ChatGPT’s response when asked to solve a Math problem with no solution.
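For readers who would like a concrete picture of what “not having a unique solution” means, here are two illustrative linear equations of our own. They are assumptions for illustration only, not the equations actually posed to ChatGPT in Figs. 2 and 3. Expanding the bracket and subtracting $2x$ from both sides shows why the first equation holds for every value of $x$ and the second for none.

```latex
\begin{aligned}
2(x + 3) = 2x + 6 &\;\Longrightarrow\; 2x + 6 = 2x + 6 \;\Longrightarrow\; 6 = 6
  && \text{true for every } x\text{: infinitely many solutions}\\
2(x + 3) = 2x + 5 &\;\Longrightarrow\; 2x + 6 = 2x + 5 \;\Longrightarrow\; 6 = 5
  && \text{false for every } x\text{: no solution}
\end{aligned}
```

Mechanically simplifying each equation stops at $6 = 6$ or $6 = 5$; recognizing what those statements imply for every possible $x$ is the kind of general statement about the existence of solutions described above.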

Whilst it is impressive to see that ChatGPT can provide students with accurate and realistic answers, this raises two immediate questions: (i) Is ChatGPT always accurate? And (ii) will students grow so reliant on ChatGPT that they can no longer solve problems on their own?

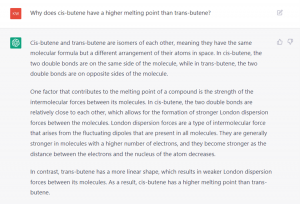

The first question raises two fundamental limitations of ChatGPT. First, ChatGPT delivers on well-posed close-ended questions, i.e., questions which are factual and premised on a factual assumption. However, when asked a question which is presupposed on erroneous facts, it can generate an incorrect response, even confidently. In the following example in Figure 4, ChatGPT confidently answered the question even though the question was incorrect (i.e., cis-butene has a lower melting point than trans-butene whereas ChatGPT supplied an explanation to the contrary). If students are over-reliant on using ChatGPT as a search engine and do not verify their answers, they run into the possibility of learning the wrong facts, or worse still, inculcating misconceptions which may be very difficult to unlearn.

Figure 4. ChatGPT’s response when asked an incorrect question.

ChatGPT does not merely produce the occasional technically inaccurate answer with confidence; it can also invent an entire fictitious argument (Smith, 2023) or mechanism that eludes detection by a non-expert. This dangerous phenomenon, known as “hallucination” (“Hallucination [artificial intelligence]”, 2023), can produce factually inaccurate text that passes as credible. Crucially, it means that ChatGPT’s output must be checked, most of the time, by none other than the user.

Second, the quality of ChatGPT’s answers varies widely: it sometimes has difficulty providing new responses to repeated open-ended prompts (Earley, 2023), especially when meaningful detail is required. This reduces ChatGPT’s reusability as a tool.

Notwithstanding the above, ChatGPT has manifold use cases for educators. For one, it can generate captivating lesson plans and help us overcome writer’s block. For instance, if we want to create an escape room activity to teach Hamlet (Fig. 5), ChatGPT offers a quick storyline for the task, which we can then adapt to suit our needs. In addition, when phrases such as “for high school students” are added to the prompt, ChatGPT can give age-appropriate lesson ideas.

Figure 5. ChatGPT’s response when asked to design an escape room activity for Hamlet.

Another use case for ChatGPT is in the classroom, as a generator of sample student responses to tutorial questions, such as essay questions, for students to critique. The advantage of an artificially generated response is that students can be objective in their critique without worrying about how a peer might feel if the essay had been written by that peer. This creates a safe space and an authentic learning context, especially since writing in the real world is much more iterative and collaborative.

Much of what is written about ChatGPT for teachers is based on user experiments, but what about its nuts and bolts? ChatGPT is a chatbot built on a massive mathematical model fitted to vast swathes of human text available on the Internet; given the preceding text, it predicts the words most likely to come next. While ChatGPT has learnt the basic statistical structure of language and appears able to reason with the user, simple but carefully designed experiments on earlier language models (Han et al., 2022), such as a set of elementary Physics questions posed to GPT-3 (Sphere, 2022), reveal that these models cannot reliably comprehend logic or, more generally, handle more complex cognitive tasks involving language. Nevertheless, it is worth noting that ChatGPT was refined from its preceding model with substantial human feedback.
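To make “predicting the most likely next word” a little more tangible, here is a deliberately tiny sketch. It assumes a word-level bigram model (it simply counts which word follows which in a made-up three-sentence corpus) and greedily picks the most frequent follower. This is a swapped-in toy technique, not how ChatGPT is actually built: ChatGPT uses a large neural network over tokens with a much longer context. All names (`train_bigram`, `predict_next`, `generate`, `CORPUS`) are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Optional

# Toy illustration only: a word-level "bigram" model that counts which word
# follows which. ChatGPT itself is a large neural network over tokens with a
# much longer context; none of these names come from OpenAI.


def train_bigram(corpus: List[str]) -> Dict[str, Counter]:
    """For every word, count how often each other word immediately follows it."""
    follows: Dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            follows[prev][nxt] += 1
    return follows


def predict_next(follows: Dict[str, Counter], word: str) -> Optional[str]:
    """Return the most frequent follower of `word`, or None if it was never seen.

    Ties are broken by whichever follower was counted first.
    """
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


def generate(follows: Dict[str, Counter], start: str, max_words: int = 5) -> str:
    """Greedily append the single most likely next word, one word at a time."""
    words = [start.lower()]
    for _ in range(max_words):
        nxt = predict_next(follows, words[-1])
        if nxt is None:
            break  # no word has ever followed this one in the corpus
        words.append(nxt)
    return " ".join(words)


# A hypothetical three-sentence "training corpus".
CORPUS = [
    "the cat sat on the mat",
    "the cat ate the fish",
    "the dog sat on the rug",
]
model = train_bigram(CORPUS)

if __name__ == "__main__":
    print(generate(model, "the"))  # prints "the cat sat on the cat"
```

The output, “the cat sat on the cat”, is built entirely from statistically likely word pairs, yet nothing checks whether it makes sense. It is only a loose analogy, but it hints at why fluent text from a next-word predictor still needs to be verified.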

We believe that the impact of ChatGPT on education has been exaggerated. As a divergent tool, i.e., for brainstorming or open-ended search, ChatGPT works very well. However, ChatGPT ought not to be allowed as a writing tool in students’ assignments or assessments. In conventional research, students may paraphrase researchers’ arguments and cite them accordingly; with ChatGPT, traceability is impossible, a problem made worse by its ability to cook up fictitious citations.

ChatGPT will only become more powerful (its modalities may extend beyond text and code), but that reinforces the importance of the human. Once, well-written text provided circumstantial evidence of good scholarship. Now, however, text that appears well written may not have been verified at all. Users of ChatGPT, if untrained or derelict, may do more harm than good. While the bounds of ethical and appropriate use of ChatGPT in an assignment remain to be determined, one thing is certain: as students gain greater discretion over moral decisions, teachers need to double down on character education.

Some have likened ChatGPT to the invention of the calculator. Just as the calculator affected students’ arithmetic skills, ChatGPT may inevitably cause their writing abilities to deteriorate. While we may retreat to closed-book, pen-and-paper assessments, perhaps ChatGPT best epitomizes, and catalyzes, the 21st-century paradigm shift in work-readiness from problem-solving to problem-posing.

Chia, O. (2023, January 6). Teachers v ChatGPT: Schools face new challenge in fight against plagiarism. The Straits Times. https://www.straitstimes.com/tech/teachers-v-chatgpt-schools-face-new-challenge-in-fight-against-plagiarism

Earley, S. (2023, January 25). ChatGPT: Insightful, Articulate, Inconsistent, and Wrong. A Game Changer? Customer Think. https://customerthink.com/chatgpt-insightful-articulate-inconsistent-and-wrong-a-game-changer/

Hallucination (artificial intelligence). (2023, February 24). In Wikipedia. https://en.wikipedia.org/wiki/Hallucination_(artificial_intelligence)

Han, S., Schoelkopf, H., Zhao, Y., Qi, Z., Riddell, M., Benson, L., Sun, L., Zubova, E., Qiao, Y., Burtell, M., Peng, D., Fan, J., Liu, Y., Wong, B., Sailor, M., Ni, A., Nan, L., Kasai, J., Yu, T., Zhang, R., Joty, S., Fabbri, A. R., Kryscinski, W., Lin, X. V., Xiong, C., & Radev, D. (2022, September 2). FOLIO: Natural Language Reasoning with First-Order Logic. arXiv. https://arxiv.org/abs/2209.00840

Lim, V. F. (2022, December 16). ChatGPT raises uncomfortable questions about teaching and classroom learning. The Straits Times. https://www.straitstimes.com/opinion/need-to-review-literacy-assessment-in-the-age-of-chatgpt

Ramponi, M. (2022, December 23). How ChatGPT actually works. Assembly AI. https://www.assemblyai.com/blog/how-chatgpt-actually-works/

Smith, N. (2023, January 31). Why does ChatGPT constantly lie? Noahpinion. https://noahpinion.substack.com/p/why-does-chatgpt-constantly-lie

Sphere, L. (2022, January 8). Testing GPT-3 on Elementary Physics Unveils Some Important Problems. Towards Data Science. https://towardsdatascience.com/testing-gpt-3-on-elementary-physics-unveils-some-important-problems-9d2a2e120280